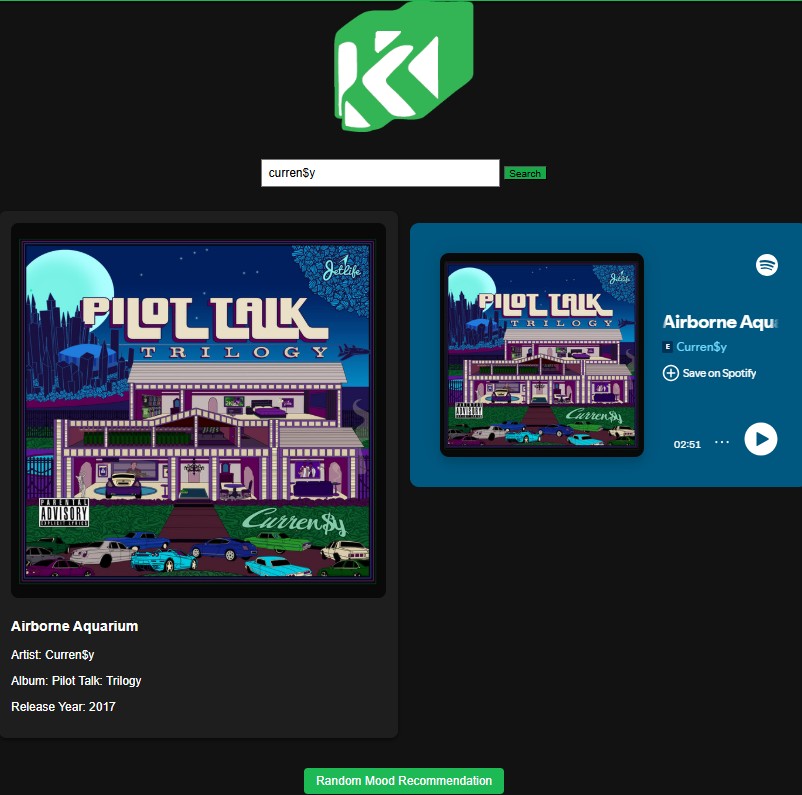

Spotify Mood Creator

📌 Where It Started

A challenge was set: build something from scratch using an existing API. With only three weeks, my friend and I set out to push the limits of what Spotify's API could do. The core idea? Mood-based recommendations that let users explore new music without committing to rigid playlists.

🤖 How AI Became Part of the Process

AI wasn’t just a tool—it was like having an extra brain in the room. We used it to interpret API structures, generate usable code, and troubleshoot inconsistencies when things weren’t lining up. It wasn’t perfect, but the process taught us just how effective AI can be in accelerating digital product creation when time is short.

🎶 What the App Actually Does

Users log in and start exploring. The system analyzes mood-related signals such as time of day, weather, genre, song key, and pitch, then suggests tracks that match the moment. It doesn’t interfere with Spotify’s native playlist system. Instead, it works alongside it as a test space for discovery that could, over time, refine a person’s listening habits.
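To make the matching idea concrete, here is a minimal Python sketch of how context signals could be turned into a ranking. It is not the code from the project. Every name in it (`Track`, `Context`, `prefers_major`, `score`, `suggest`), the major/minor `mode` field, and the scoring weights are assumptions made up for illustration, and it works on an in-memory list rather than calling Spotify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    genre: str
    key: int   # pitch class 0-11 (0 = C); carried along but not scored here
    mode: int  # assumed field: 1 = major, 0 = minor


@dataclass(frozen=True)
class Context:
    hour: int           # local hour, 0-23
    weather: str        # e.g. "sunny" or "rainy"
    genres: frozenset   # genres the user picked


def prefers_major(ctx: Context) -> bool:
    """Hypothetical rule: sunny daytime leans major, anything else leans minor."""
    return ctx.weather == "sunny" and 6 <= ctx.hour < 20


def score(track: Track, ctx: Context) -> int:
    """Add up simple points: a genre match counts double a mode match."""
    points = 0
    if track.genre in ctx.genres:
        points += 2
    wanted_mode = 1 if prefers_major(ctx) else 0
    if track.mode == wanted_mode:
        points += 1
    return points


def suggest(tracks: list, ctx: Context, limit: int = 3) -> list:
    """Return up to `limit` tracks with a positive score, best first."""
    ranked = sorted(tracks, key=lambda t: score(t, ctx), reverse=True)
    return [t for t in ranked if score(t, ctx) > 0][:limit]


catalog = [
    Track("A", "pop", key=0, mode=1),
    Track("B", "pop", key=9, mode=0),
    Track("C", "jazz", key=2, mode=0),
    Track("D", "jazz", key=7, mode=1),
]

sunny_afternoon = Context(hour=14, weather="sunny", genres=frozenset({"pop"}))
print([t.title for t in suggest(catalog, sunny_afternoon)])
```

A rule table like this is easy to reason about in a short prototype, but the weights are arbitrary, which is one reason a mood match built this way can feel mechanical.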

🧐 Lessons Learned & What Could Have Been Improved

The app worked—but the mood detection? That’s where things could’ve been sharper. Given more time, deeper user research and AI assistance could’ve fine-tuned the recommendation engine, making it feel less like an algorithm and more like a true personal assistant for music discovery.

⚡ Three Weeks, Tight Execution, Big Takeaways

With little time, limited assets, and no graphic design expertise, we kept the visuals simple but made sure the concept shone. The project showed that when time is short, you have to focus on core interactions and embrace iteration. Even as a rough prototype, the potential was there: a functional case study showing that the intersection of AI, music, and UX design is worth exploring further.